For marketers seeking to optimize their media mix, an old stalwart has been making a comeback as of late: The humble marketing mix model (MMM).

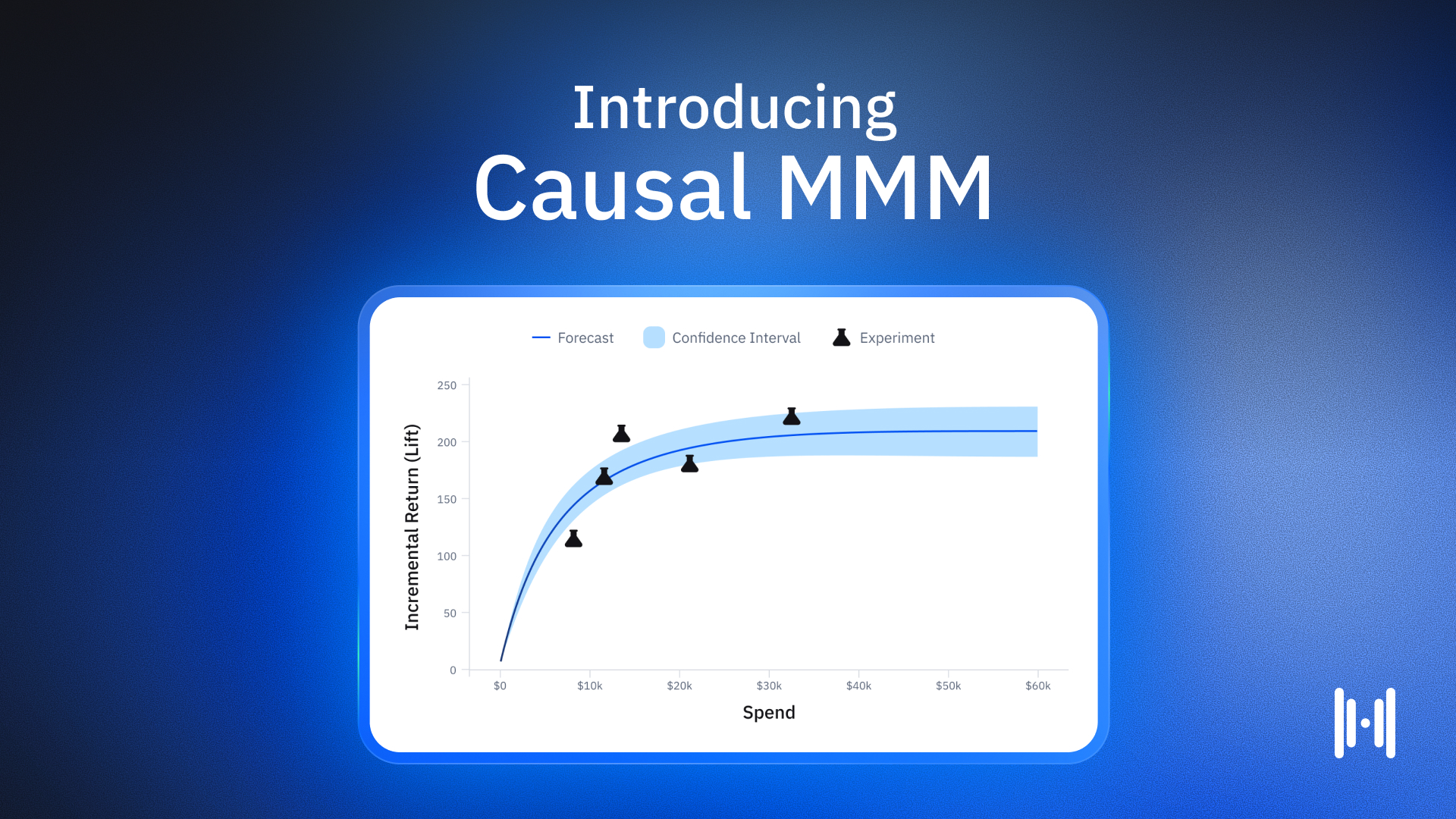

Traditional MMMs use backwards-looking regression analysis or other similar techniques to understand the impact that things like seasonality, economic conditions, and competitive activity can have on marketing. The idea is to provide a more accurate picture of paid media effectiveness, but the result is… well, fuzzy at best. Haus is building a new kind of MMM: Causal MMM — a model that’s uniquely driven and tuned by incrementality experiments.

Traditional MMMs aim to help marketers understand how to allocate their paid media dollars based on past performance and variable signals. We’ve historically been pretty critical of traditional MMMs: they’re expensive, they require enormous amounts of data (usually at least two years’ worth), they’re hard to act on and opaque about their methods and math, and they don’t actually tell you what’s causing business outcomes.

That’s where Causal MMM comes in.

Shortly after we rolled out our Causal MMM news, a brand asked us a great question: “Why is it called Causal MMM?” The answer is because it’s rooted in causality. Cause and effect. Incrementality experiments identify your marketing tactics causing business outcomes, and Causal MMM automatically uses those experiment results to tune a forecasting model to help you efficiently allocate budget. Causal MMM isn’t rooted in historical correlational data — it’s rooted in causal reality.

How do experiments strengthen an MMM?

Let’s start with the basics: Incrementality experiments help determine whether an observed outcome happened because of a specific action. Did the plant grow because you added fertilizer, or would it have grown anyway? Did this customer purchase because of a YouTube ad, or would they have purchased anyway? You get the idea.

In their simplest form, incrementality experiments (also known as geo tests or lift tests) work by comparing outcomes between a treatment group (people exposed to an ad) and a control group (people not exposed). This establishes causality: the treatment and control groups are characteristically indistinguishable, meaning the only variable changing is the marketing tactic being tested.
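To make that comparison concrete, here is a minimal sketch of the arithmetic behind a lift read. The geo-level conversion numbers and the `incremental_lift` helper are hypothetical and purely illustrative; a real experiment would also need proper group matching and a significance check, which this sketch skips.

```python
from statistics import mean


def incremental_lift(treatment, control):
    """Return (absolute lift, relative lift) of the treatment mean over the control mean."""
    treatment_avg = mean(treatment)
    control_avg = mean(control)
    if control_avg == 0:
        raise ValueError("control average is zero, so relative lift is undefined")
    absolute = treatment_avg - control_avg
    return absolute, absolute / control_avg


# Hypothetical weekly conversions per geo (made-up numbers, not real results).
treatment_geos = [120, 135, 128, 142]  # geos where the ad ran
control_geos = [100, 110, 105, 115]    # comparable geos where it did not

abs_lift, rel_lift = incremental_lift(treatment_geos, control_geos)
print(f"Incremental conversions per geo: {abs_lift:.2f}")
print(f"Relative lift: {rel_lift:.1%}")
```

Because the two groups are otherwise comparable, the gap between their averages is what gets attributed to the ad.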

Traditional MMMs, on the other hand, aren’t designed to establish causality. Instead, they aim to help brands figure out where to spend based on historical data, taking into account things like seasonality, promotional periods, etc. — allegedly. One of their fundamental shortcomings, though, is the classic problem of multicollinearity.

Without going full ham on a stats lecture, multicollinearity describes the pain of being unable to untangle correlations. Here’s a hypothetical scenario:

* Business revenue is increasing

* Spend is increasing in Channel A

* Spend is also increasing in Channel B

Where should you put your money? Traditional MMMs aren’t able to distinguish whether revenue is increasing because of Channel A or because of Channel B (or, hypothetically, because of neither!). Feed this scenario into two different MMMs, and you might get two opposite recommendations on where to invest your budget. Suddenly this tool that’s meant to be helpful… isn’t so helpful. What are you supposed to do in this situation?
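Here is a small sketch of why that happens, using made-up numbers and the most extreme case: Channel B spend moves in perfect lockstep with Channel A spend. The data and the `squared_error` helper are hypothetical. Three very different stories about which channel drives revenue fit the history equally well.

```python
# Hypothetical weekly data: Channel B spend is always exactly half of Channel A spend.
spend_a = [10.0, 20.0, 30.0, 40.0]
spend_b = [5.0, 10.0, 15.0, 20.0]
revenue = [40.0, 80.0, 120.0, 160.0]


def squared_error(coef_a, coef_b):
    """Sum of squared errors for the model revenue = coef_a * A + coef_b * B."""
    return sum(
        (r - (coef_a * a + coef_b * b)) ** 2
        for a, b, r in zip(spend_a, spend_b, revenue)
    )


# Three competing "explanations" of the same history.
all_from_a = squared_error(4.0, 0.0)  # Channel A gets all the credit
all_from_b = squared_error(0.0, 8.0)  # Channel B gets all the credit
shared = squared_error(2.0, 4.0)      # credit is split between them

print(all_from_a, all_from_b, shared)
```

All three fits have zero error, so the historical data alone gives no basis for picking one. Real spend is rarely this perfectly correlated, but the closer it gets, the less a purely correlational fit can tell the channels apart.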

By using a traditional MMM that’s not foundationally powered by experiments, you’re allowing a statistician to make a bunch of assumptions about your marketing efficiency without grounding them in any sort of verifiable truth.

This is exactly where experiments come into play. In building a model off of incrementality results — not just layering them on as an afterthought — the model is powered and tuned by the tactics causing business outcomes for your brand. The result is a tool that isn’t just a mysterious black box — it’s transparently built, tuned, and ready for action.

What are the use cases for Causal MMM?

There are lots of reasons why a brand might want to consider Causal MMM, but here are a few core use cases.

Better understand seasonality and promotional periods

If an incrementality test is run during high season (hello, Q5!), its results may not apply during the off-season. Causal MMM can be used as you gain continuous reads on incrementality throughout the year, even as buying cycles change. So instead of drawing correlational conclusions based on seasonal high or low periods, you can test regularly, know what’s causing outcomes throughout the year, and have these results continuously inform spend decisions.

Inform incrementality testing roadmaps

Causal MMM will recommend new experiments to run based on your business goals, budget, strategy, and other variables — in order of importance. No longer are you in the dark trying to figure out how the heck a model is recommending a specific output — with Causal MMM, you can clearly get a sense of what’s tuning the model and incorporate strategic experiments to gain more precision. We’ll even let you know when it’s time to rerun an experiment.

Assess your media mix strategy – sensibly

Causal MMM allows you to break out each channel in the practical ways you already think about it. For example, you can group platforms or strategies the way you actually manage them, such as Retargeting/bottom-of-funnel vs. Prospecting/top-of-funnel.

Answer “what if?” questions

What would happen if you moved $1M from Meta to YouTube? Is there a more efficient split of your existing budget? Use Causal MMM’s Budget Playground to see where and how your money is best spent — or not spent.
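As a rough illustration of the arithmetic behind a "what if?" question, here is a toy sketch. Everything in it is an assumption made for the example: the square-root response curve, the `curves` scale values, the starting budgets, and the `shift` helper. It is not how Budget Playground works under the hood; it only shows how a reallocation scenario can be compared once you have some response curve per channel.

```python
import math

# Hypothetical diminishing-returns curves: revenue = scale * sqrt(spend).
# The scale values are invented for illustration, not measured.
curves = {"Meta": 900.0, "YouTube": 700.0}

# Hypothetical current budget split, in dollars.
budget = {"Meta": 4_000_000.0, "YouTube": 1_000_000.0}


def total_revenue(allocation):
    """Predicted revenue for an allocation under the assumed curves."""
    return sum(curves[ch] * math.sqrt(spend) for ch, spend in allocation.items())


def shift(allocation, src, dst, amount):
    """Return a new allocation with `amount` moved from `src` to `dst`."""
    if amount > allocation[src]:
        raise ValueError("cannot move more than the source channel's budget")
    moved = dict(allocation)
    moved[src] -= amount
    moved[dst] += amount
    return moved


before = total_revenue(budget)
scenario = shift(budget, "Meta", "YouTube", 1_000_000.0)
after = total_revenue(scenario)

print(f"Predicted revenue before: ${before:,.0f}")
print(f"Predicted revenue after:  ${after:,.0f}")
```

Under these made-up curves, moving the $1M raises predicted revenue because the assumed Meta curve is further into diminishing returns. With different curves, the same move could just as easily lose money, which is why the curves need to be grounded in experiments.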

How do I get started with Causal MMM?

Incrementality-powered MMM. Test-fueled MMM. Experiment-driven MMM. Call it what you want, but we’re calling it what it is: Causal. A true causal MMM doesn’t just layer experiments on top of an otherwise opaque model – a true causal MMM is driven by your tests, and always sharpening based on your results.

In Haus’ closed Causal MMM beta, we're seeing Causal MMM outperform traditional models on precision, speed, and user understanding. While Causal MMM isn’t generally available yet, stay up to date here for news of its official launch later in 2025.
