Let’s hit pause for a moment. Set aside what you’re working on, tune out any distractions, then answer this question: In this very moment, what is your absolute #1 goal as a marketer?

Chances are, the answer came to you quickly. If you said “Improve overall marketing efficiency,” you certainly aren’t alone. In our recent industry survey, 61% of respondents said the same thing. You also aren’t alone if you said growth in ecommerce (36%), growth in retail (21%), or growth on Amazon (8%).

Whether you’re after efficiency or growth (or both), incrementality testing is an increasingly essential tool in the modern marketer’s toolkit. After all, incrementality testing does something 2010s measurement darlings like last-click attribution never could: It measures causation. Is your marketing causing more customers to convert?

Using control groups, incrementality tests isolate the actual impact of your campaigns — and filter out the customers who would have converted anyway. While that might sound technical, it’s a surprisingly intuitive process once you grasp the fundamentals — so let’s dive in.

What is incrementality testing?

Incrementality testing uses a control group to isolate and measure the causal impact of your marketing campaign.

What’s a control group? It’s just a portion of your audience that sees no ad campaign. So you might compare conversion rates among the 80% of people who saw your ad (test group) versus the 20% who didn’t (control group). If those who saw your ad convert at a higher rate, your marketing campaign is incremental.
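To make the arithmetic concrete, here is a minimal sketch of that comparison in Python. The audience sizes and conversion counts are hypothetical numbers chosen for illustration, and the function names are our own. It is a simplified picture of the idea, not a description of how any particular platform computes lift.

```python
def conversion_rate(conversions: int, audience_size: int) -> float:
    """Share of an audience that converted."""
    if audience_size <= 0:
        raise ValueError("audience_size must be positive")
    return conversions / audience_size


def relative_lift(test_rate: float, control_rate: float) -> float:
    """Lift of the test group over the control group, relative to control."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (test_rate - control_rate) / control_rate


# Hypothetical 80/20 split: 80,000 people saw the ad, 20,000 were held out.
test_size, test_conversions = 80_000, 2_400
control_size, control_conversions = 20_000, 500

test_rate = conversion_rate(test_conversions, test_size)
control_rate = conversion_rate(control_conversions, control_size)
lift = relative_lift(test_rate, control_rate)

# Conversions in the test group that would not have happened without the ad,
# assuming the control rate is a fair stand-in for "no campaign".
incremental_conversions = (test_rate - control_rate) * test_size

print(f"Test rate: {test_rate:.2%}, control rate: {control_rate:.2%}")
print(f"Relative lift: {lift:.1%}")
print(f"Incremental conversions: {incremental_conversions:.0f}")
```

In this made-up example, the test group converts at 3% versus 2.5% for the control group, so roughly one in six test-group conversions can be credited to the campaign. The rest would likely have happened anyway.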

Incrementality testing functions similarly to randomized controlled trials in drug development. A pharmaceutical company gives its drug to one group of test subjects and a placebo to the rest, then compares outcomes to gauge the drug’s true effectiveness.

For more on the basics of incrementality testing, visit Incrementality School. Nope, no homework at this school — just snackable info about the ins and outs of incrementality, designed by Haus experts.

Causality: the heart of incrementality testing

Traditional measurement tools like last-click or multi-touch attribution look at patterns in your data and assign credit. Naturally, a lot of what they measure is statistical noise. After all, a spike in conversions could happen for various reasons — seasonality, channel overlap, or external events.

But if you accidentally attribute that spike to your new campaign, then decide to double down…you’re lighting ad dollars on fire.

“If you don’t have a sense of the incrementality of your channels, you’re likely spending a lot of money that is not driving any actual value for the company,” says Haus Measurement Strategist Nick Doren. “You’re paying for things that are already likely to convert anyway, so you’re hurting the bottom line of the company.”

Incrementality testing measures causal impact so that you can invest confidently in the channels that actually move your business. Working with an incrementality partner who takes test design seriously only raises that confidence. That might mean using synthetic controls, as Haus does, to build a control group that more closely mirrors your treatment group.

Wondering which incrementality partner is right for your brand? Our incrementality testing buyer’s guide offers some useful guidance.

Incrementality vs. A/B testing: What’s the difference?

If you’re a marketer, you’re probably familiar with A/B testing. It measures performance between two variants of the same experience — two landing pages, two email subject lines, etc.

A/B testing is a trusted tool for many marketers — but it shouldn’t be confused with incrementality testing. Incrementality testing differs by measuring whether the experience or campaign had any effect. It does so by comparing performance with your campaign versus performance without your campaign.

While these methods are different, they’re complementary. You might use incrementality testing to figure out whether Meta ASC moves the needle for your brand — then you use A/B testing to pinpoint the more effective Meta campaign creative. For more on how A/B testing and incrementality testing differ (but work together), check out our deep dive on the topic.

Questions that incrementality testing can answer

Incrementality testing shines when you need clear answers about campaign performance. Here are a few common use cases:

- Is your new channel driving impact for your business?

- Should you include or exclude branded search terms in PMax campaigns?

- When has your ad spend hit the point of diminishing returns?

- Are customers converting on your site, on Amazon, or at retail locations?

- What’s the right media mix for incremental growth?

- How incremental are YouTube ads for your brand?

- Which paid marketing efforts are most incremental during peak seasonal periods?

Look for an incrementality partner with talented growth experts who can help you get the most out of your measurement strategy.

“We’ll push our customers,” says Haus Measurement Strategist Alyssa Francis. “We’re not afraid to tell them straight up, ‘That won’t be a valuable learning for you — we recommend this instead.’ We find things really click when you run interesting tests.”

At Haus, brands are empowered to challenge the status quo and answer the big questions, then use those insights to improve the bottom line.

Distinct ad campaigns require distinct incrementality tests

When designing an experiment, you must decide how many variables you want to test and whether or not you need a control group. The nature of the question you’re trying to answer will determine these structural details.

Let’s dive into different structures for holdout tests on the Haus platform.

2-cell experiment with a control group

What it does: Offers a controlled environment for assessing the causal impact of a specific marketing treatment by comparing it against a control group

When to use it: To measure the impact of your ads on a key channel compared to a group receiving no ads

Example: Ritual ran a series of 2-cell with holdout tests when they were testing TikTok as a new channel

2-cell experiment without a control group

What it does: Essentially an A/B test in which two variables are tested against one another. You will learn if cell A or cell B performs better than the other (but won’t measure incrementality)

When to use it: You already know the channel is incremental, and you want to optimize its performance

Example: Testing two different types of creative for your new Meta campaign

3-cell experiment with a control group

What it does: Two treatments are tested against a control to measure which treatment is most incremental (while the control measures the incrementality of each treatment)

When to use it: You’re trying to determine optimal spend level or want to compare tactics to determine which is the most incremental

Example: Caraway ran a 3-cell test with a holdout on Google to see which was more incremental: PMax with Branded or PMax without Branded

3-cell experiment without a control group

What it does: Compares three variables against one another to learn which performs best. Doesn’t measure the incrementality of these three variables since a control is required to identify business lift

When to use it: You want to know which treatment is the most impactful or better understand the diminishing marginal returns curve

Example: FanDuel ran a 3-cell test without a holdout to determine whether they had room to spend more on YouTube efficiently or whether they would hit the point of diminishing marginal returns

The typical process for incrementality testing

The incrementality testing journey often starts with a question. This “question” is more aptly known as your hypothesis. For instance, “We think our audience is on TikTok and feel it could be an effective channel for us” is a clear hypothesis. You can then design and run an incrementality experiment to test this hypothesis.

Here’s how the process typically breaks down:

- Define the hypothesis. What exactly do you want to know? This might mean confirming a hunch or finally getting clarity on a gray area that’s confused your team for a while.

- Design the test. Considering your business goals and challenges, you’ll decide how long to run your test, how big of a holdout you’ll need, and how many treatment and control groups you need. For instance, a diminishing returns test often calls for three groups, while a new channel test often calls for two.

- Run the experiment. Your test will be most effective if you work with a partner with a dedicated team of econometricians and machine learning experts who can clean your data thoroughly and use the latest frontier methods in causal inference to isolate variables precisely.

- Measure the lift. The fun part is diving into results and finally getting answers to those nagging questions. Most crucially, you’ll want to look at incremental lift, which compares the conversion rate in your treatment group against the conversion rate in your control group.

- Act on the results. With your results, you can confidently reallocate budget, kill ineffective tactics, and scale what’s working. (We were wrong. This is the fun part.)
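Before acting on a measured lift, it helps to check whether the gap between groups is larger than chance alone would explain. The sketch below uses a textbook one-sided two-proportion z-test on hypothetical counts. This is an assumption made for illustration: it presumes users were randomly assigned to treatment or control, and it is not the synthetic control method described elsewhere in this article.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_t: int, n_t: int, conv_c: int, n_c: int):
    """One-sided z-test: is the treatment conversion rate above control?"""
    p_t = conv_t / n_t
    p_c = conv_c / n_c
    # Pooled rate under the null hypothesis that the campaign has no effect.
    pooled = (conv_t + conv_c) / (n_t + n_c)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    z = (p_t - p_c) / standard_error
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


# Hypothetical results: 2,400 of 80,000 treated users converted,
# versus 500 of 20,000 users in the holdout.
z_score, p_value = two_proportion_z_test(2_400, 80_000, 500, 20_000)

print(f"z = {z_score:.2f}, one-sided p-value = {p_value:.5f}")
if p_value < 0.05:
    print("The lift is unlikely to be noise at the 5% level.")
else:
    print("The test cannot distinguish this lift from noise.")
```

A small p-value says the lift is probably real. It does not say the lift is large enough to justify the spend, so pair it with the cost per incremental conversion when deciding what to scale.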

Example: Testing Google PMax across three cells

Hypothesis: A DTC brand wanted to understand how effective Google’s Performance Max (PMax) campaigns were — and whether increasing spend would drive incremental lift.

Design: They ran a 3-cell experiment:

- Cell A (Control): No PMax spend.

- Cell B (Baseline): Current PMax budget.

- Cell C (High spend): 50% higher PMax budget.

Result:

- Cell B delivered a moderate lift over Cell A, proving PMax was adding value.

- Cell C showed a stronger lift, but with diminishing returns starting to appear.

The test confirmed that PMax was incremental — but also highlighted the sweet spot for spend. By scaling judiciously, the brand avoided wasting dollars on the tail end of the curve.

The impact? They reallocated budget to hit that performance sweet spot — boosting overall efficiency without overspending.
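One way to see diminishing returns in a 3-cell result is to compare what each additional block of spend costs per incremental conversion. The sketch below does that with hypothetical spend and conversion figures, since the example above does not report actual numbers. The `Cell` class and the function name are illustrative, and the cells are assumed to be comparable in size.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    name: str
    spend: float        # PMax spend during the test window, in dollars
    conversions: float  # conversions observed in the cell


def cost_per_incremental_conversion(lower: Cell, higher: Cell) -> float:
    """Extra dollars spent per extra conversion when moving between cells."""
    extra_spend = higher.spend - lower.spend
    extra_conversions = higher.conversions - lower.conversions
    if extra_conversions <= 0:
        return float("inf")  # more spend bought no additional conversions
    return extra_spend / extra_conversions


# Hypothetical outcomes for the three cells.
control = Cell("A (no PMax)", spend=0, conversions=1_000)
baseline = Cell("B (current budget)", spend=100_000, conversions=1_300)
high = Cell("C (+50% budget)", spend=150_000, conversions=1_400)

baseline_cost = cost_per_incremental_conversion(control, baseline)
marginal_cost = cost_per_incremental_conversion(baseline, high)

print(f"A to B: ${baseline_cost:,.2f} per incremental conversion")
print(f"B to C: ${marginal_cost:,.2f} per incremental conversion")
if marginal_cost > baseline_cost:
    print("Each extra dollar buys less at the higher spend level.")
```

With these made-up figures, the last $50,000 costs more per incremental conversion than the first $100,000 did. That is the pattern a brand would weigh against its margin when deciding how far up the curve to spend.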

Practical results require a clear experiment design

Not all incrementality testing tools are created equal. Some rely on black-box attribution or dated methods — so setup drags on and your results stay murky.

When you’re evaluating platforms, look for:

- Transparent methodology. You should understand the math behind your results. Read why Haus is so passionate about transparency in marketing measurement.

- Flexible test design. 2-cell tests, 3-cell tests, post-treatment windows — you need options. Here’s how a series of Haus tests helped one brand zero in on the effectiveness of brand search.

- Fast setup. You shouldn’t spend weeks getting a simple test live.

- Clean reporting. Share tweaks and results with your team in clear visuals.

How Haus designs incrementality tests

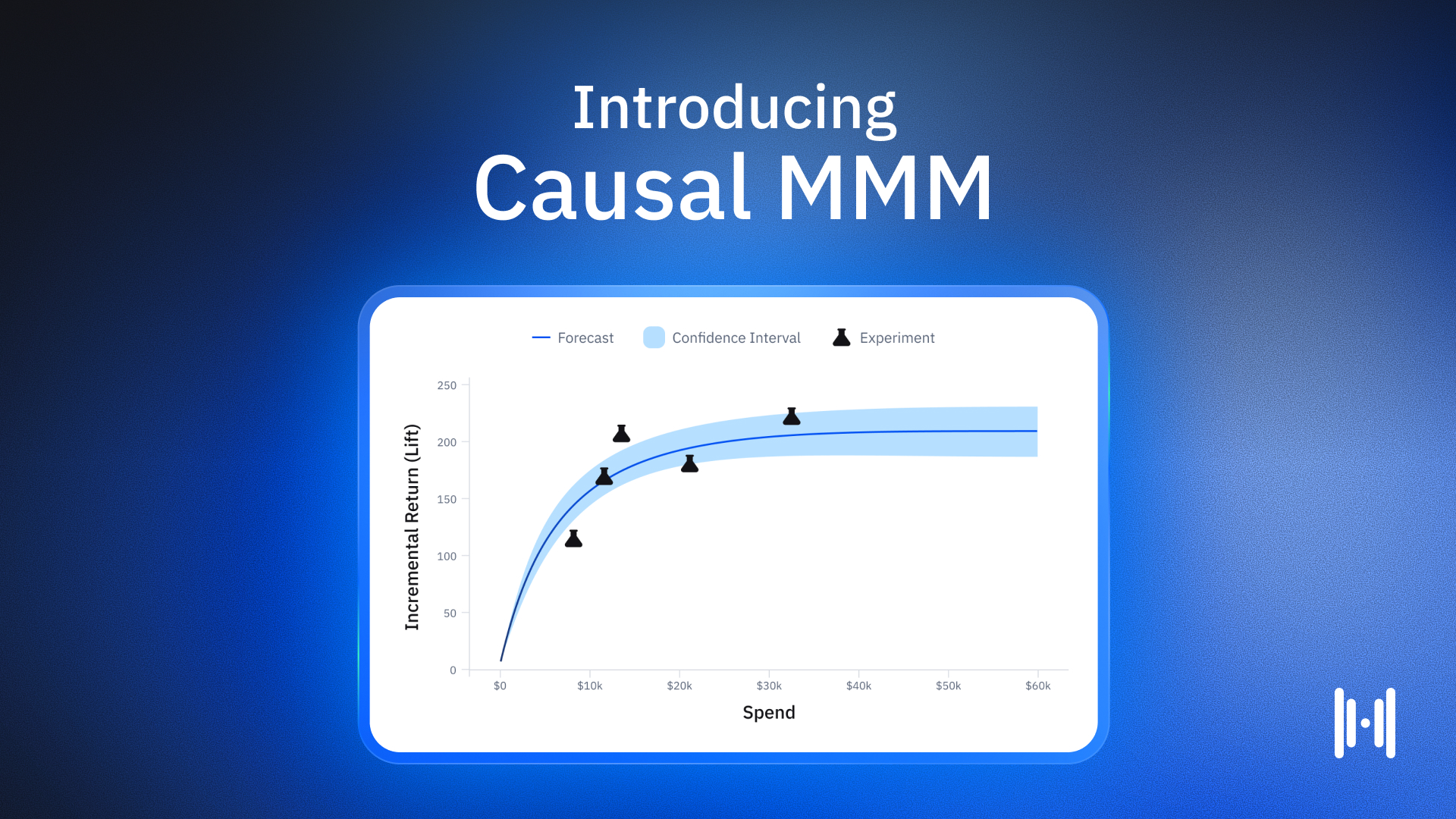

At Haus, we build tests around synthetic control: an advanced modeling method that constructs control groups whose characteristics are nearly identical to those of your test group. This leads to results that are 4X more precise than those produced by matched-market tests.

Haus isn’t just the most precise solution. It’s also a true incrementality partner. This means you’ll work with a dedicated team of Measurement Strategists who help you make the most of your learnings, then turn them into improved business outcomes.

Ready to see it in action? Check out our incrementality use cases or get started with our step-by-step guide.

Frequently Asked Questions about Incrementality Testing

What is incrementality testing, and how does it use a control group to measure campaign impact?

Incrementality testing measures the causal impact of a marketing campaign by comparing conversion rates between a test group that sees the ads and a control group that does not, isolating the lift driven by the campaign.

Why is causality — the ability to measure true lift — important compared to traditional attribution methods like last-click?

Traditional attribution assigns credit based on correlation and often misattributes seasonal spikes or overlapping channels, whereas incrementality testing filters out conversions that would have happened anyway, preventing wasted ad spend and revealing true performance.

How do 2-cell and 3-cell experiment structures differ, and when would you use each on the Haus platform?

A 2-cell test with a control group compares one treatment to no ads (ideal for new channel validation), while a 3-cell test with a control lets you compare two treatment levels against a holdout (perfect for testing spend levels or tactic comparisons).

What are the main steps of a typical incrementality testing process from start to finish?

First you define a clear hypothesis, then design the test (holdout size, duration, cells), run the experiment using robust data-cleaning and modeling, measure the incremental lift, and finally reallocate budget or optimize tactics based on the results.
