Incrementality School, E5: Randomized Control Experiments, Conversion Lift Testing, and Natural Experiments

Sure, the title’s a mouthful – but attributing changes in data (e.g., ‘my KPI went up’) to certain factors (e.g., ‘we increased ad spend’) is hard to do well.

Emily K. Schwartz • Nov 21, 2024

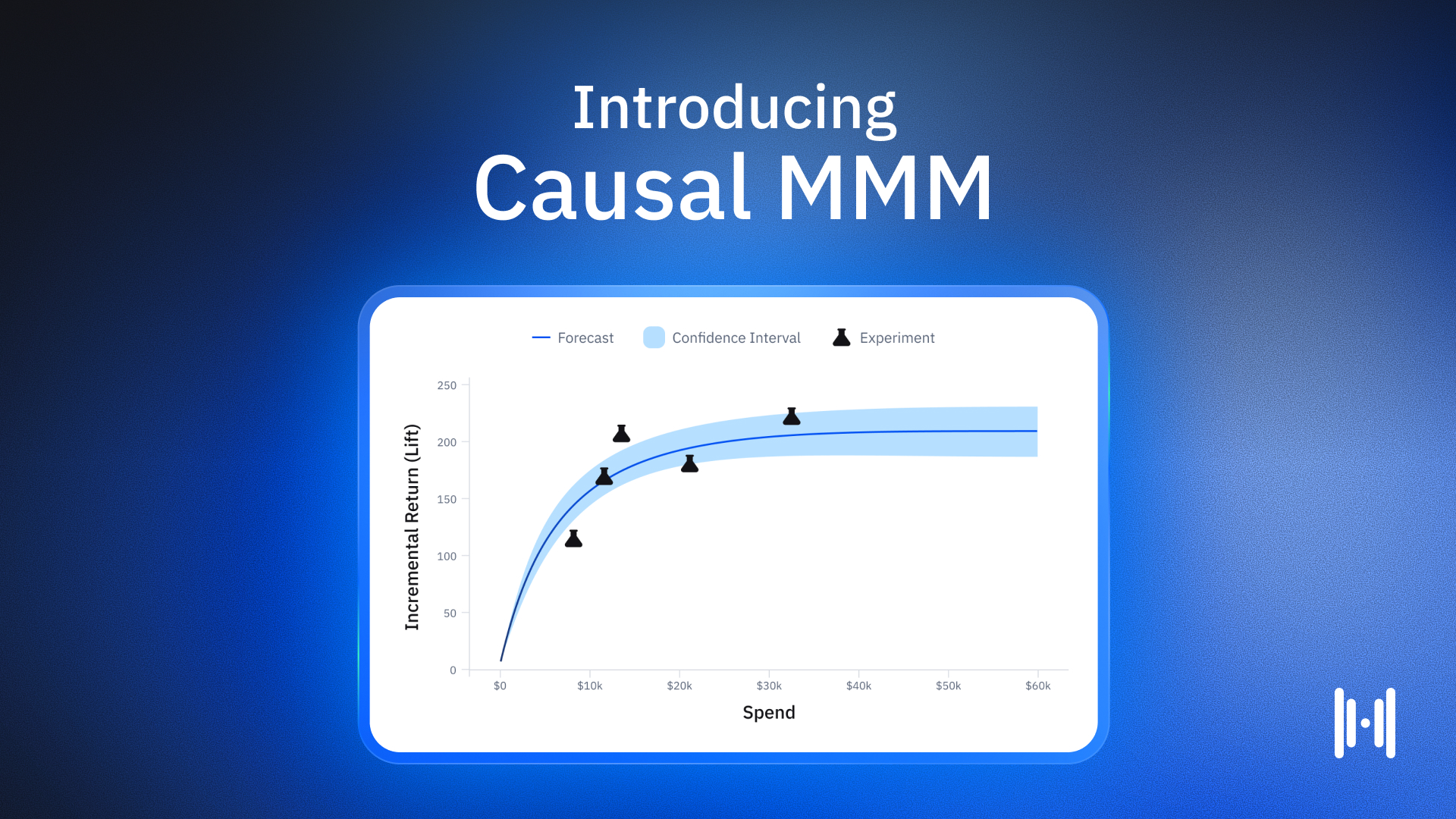

We talk a lot about experiments at Haus. After all, our thing is that we enable brands to design and launch tests in minutes to measure what marketing efforts are actually driving incremental results – scientifically and objectively.

(Say that three times fast.)

In our previous Incrementality School installments, we defined incrementality, spelled out what you can actually test, looked at how brands measure incrementality, and most recently, covered the criteria that often make a brand a prime candidate for incrementality testing.

That’s a lot of covered ground – but every once in a while, we field questions about experiments more broadly. What’s so special about geo experiments, anyway? What about other types of experiments out there?

That’s what we’re here to cover in this fifth installment of Incrementality School. Let’s get into it.

The gold standard in causal inference

“Attributing changes in data (ex: ‘my KPI went up or down’) to certain factors (ex: ‘we upped spend in an ad channel’) is incredibly hard to do well, and extremely easy to screw up,” explains Haus’ Economist Simeon Minard. “The world is huge and chaotic, and there are a million things that could be messing up your measurement.”

So what’s a marketing team to do? This is where an economist like Simeon might recommend randomized control experiments – otherwise known as the “gold standard of causal inference.”

“When you do a true, well-designed randomized control experiment,” he explains, “you end up with data that has variation caused by 1) the true effect of your action (think ad channel) and 2) random noise. It turns out we understand the math of random noise really well, and this makes it possible to get an excellent read of what the true effect was – along with how certain we are about it.”
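To make Simeon's point concrete, here is a minimal sketch of how a randomized experiment separates the true effect from random noise. Everything in it is an assumption made for illustration: the simulated outcomes, the `TRUE_EFFECT` value, and the helper name `difference_in_means` are hypothetical, and the normal-approximation confidence interval is a textbook simplification rather than a description of how Haus analyzes tests.

```python
import math
import random
import statistics


def difference_in_means(treatment, control, z=1.96):
    """Estimate the effect as a difference in means, with an approximate 95% interval."""
    effect = statistics.mean(treatment) - statistics.mean(control)
    # Standard error of the difference between two independent sample means.
    se = math.sqrt(
        statistics.variance(treatment) / len(treatment)
        + statistics.variance(control) / len(control)
    )
    return effect, (effect - z * se, effect + z * se)


rng = random.Random(0)   # fixed seed so the simulation is repeatable
TRUE_EFFECT = 5.0        # hypothetical lift per unit, known only because we simulate it

# Randomly assign half of 2,000 hypothetical units to treatment.
units = list(range(2000))
rng.shuffle(units)
treated = set(units[:1000])

# Each outcome = baseline + random noise (+ the true effect if the unit was treated).
outcomes = {
    u: 100 + rng.gauss(0, 20) + (TRUE_EFFECT if u in treated else 0.0)
    for u in units
}
treatment = [outcomes[u] for u in units if u in treated]
control = [outcomes[u] for u in units if u not in treated]

effect, (low, high) = difference_in_means(treatment, control)
print(f"estimated effect: {effect:.2f} (95% CI {low:.2f} to {high:.2f})")
```

Because assignment is random, the only systematic difference between the two groups is the treatment itself. The width of the interval is the "how certain we are" part of the quote.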

At Haus, we use stratified random sampling to keep treatment and control groups balanced and guard against selection bias. Factor in our use of synthetic controls and models optimized for marketing contexts, and even the largest markets can be included in test designs without distorting results. There’s the gold standard.
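For readers who haven't met stratified random sampling before, the sketch below shows the general idea: group similar units into strata, then randomize within each stratum so both groups end up with a comparable mix. This is a generic illustration, not Haus's implementation. The function name `stratified_assignment`, the choice to stratify on baseline sales, and the geo data are all hypothetical.

```python
import math
import random


def stratified_assignment(baseline_sales, n_strata=4, seed=0):
    """Randomly split units into treatment/control within strata of similar baseline sales."""
    rng = random.Random(seed)
    # Rank units by baseline sales so each stratum holds units of similar size.
    ranked = sorted(baseline_sales, key=baseline_sales.get)
    stratum_size = math.ceil(len(ranked) / n_strata)

    assignment = {}
    for start in range(0, len(ranked), stratum_size):
        stratum = ranked[start:start + stratum_size]
        rng.shuffle(stratum)  # the random part: order within the stratum is arbitrary
        half = len(stratum) // 2
        for unit in stratum[:half]:
            assignment[unit] = "treatment"
        for unit in stratum[half:]:
            assignment[unit] = "control"
    return assignment


# Hypothetical geos with made-up baseline sales figures.
baseline_sales = {f"geo_{i:02d}": 1000 * (i + 1) for i in range(16)}
assignment = stratified_assignment(baseline_sales)

for geo in sorted(assignment):
    print(geo, baseline_sales[geo], assignment[geo])
```

With plain random assignment, the biggest markets could all land in one group by chance. Randomizing within strata means every size tier contributes to both treatment and control.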

Where conversion lift testing fits into the equation

If you’re advertising on ad platforms like Google and Meta, the question of conversion lift testing might be lingering in the back of your mind. Conversion lift testing is when an ad platform controls, at the individual level, whether a customer is shown your ad; it can be used to measure platform-specific incrementality. This involves using conversion pixels, so brands can see whether a customer visited their website and took an action, like making a purchase or entering a lead flow, after seeing or engaging with an on-platform ad.
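As a rough illustration of the arithmetic behind a lift readout, the sketch below compares conversion rates between users who were eligible to see the ad and users who were held out. It is a simplified, assumed formulation: the function name `conversion_lift` and the counts are hypothetical, and real platform methodologies are more involved than this.

```python
def conversion_lift(treated_conversions, treated_users, control_conversions, control_users):
    """Return (incremental conversions, relative lift) from simple group counts."""
    treated_rate = treated_conversions / treated_users
    control_rate = control_conversions / control_users
    if control_rate == 0:
        raise ValueError("relative lift is undefined when the control group has no conversions")
    # Conversions in the treated group beyond what the control rate would predict.
    incremental = (treated_rate - control_rate) * treated_users
    relative_lift = (treated_rate - control_rate) / control_rate
    return incremental, relative_lift


# Hypothetical counts: 100,000 users per group.
incremental, lift = conversion_lift(
    treated_conversions=1200, treated_users=100_000,
    control_conversions=1000, control_users=100_000,
)
print(f"incremental conversions: {incremental:.0f}, relative lift: {lift:.1%}")
```

Note that this only counts conversions the pixel can observe, which is exactly the limitation discussed next.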

While conversion lift testing is typically highly powered, it doesn’t capture view-through or omnichannel halo effects. For example, “If someone watches a YouTube ad on their TV and then goes and takes an action based on that ad on a different device, that ‘view-through’ conversion isn’t captured,” explains Haus’ Head of Science Joe Wyer. “If they take action in an additional sales channel like retail or on Amazon from exposure to that YouTube ad, that’d also be missed with conversion lift testing.”

While conversion lift testing can be a beneficial tool in a testing toolbox, the approaches that platforms use to develop these tools tend to be inconsistent. “The modeling methodologies tend to be like a Rube Goldberg machine behind the scenes,” says Joe. In practice, that may mean the data these platforms consider, and how they analyze it to produce results, can differ from platform to platform. “At the end of the day, you have to remember these platforms are grading their own homework.”

How natural experiments stack up

“You don’t always have experiments that were made with intention,” explains Joe. “With something like GeoLift or conversion lift testing, you’re trying to randomly vary what you’re doing across some dimension – be it place or people – that you have control over.”

With natural experiments, that control is removed – and serendipity takes over.

For example, let’s imagine there’s a random, unpredictable outage on your website that impacts a specific user flow in a certain region. How does this outage impact sales? Or does it? What can you learn from this? Maybe it had a disproportionate impact – or maybe it didn’t even impact sales numbers at all. This is an example of a natural experiment.
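One common way analysts approach a question like this is a difference-in-differences comparison: measure how sales changed in the affected region, then subtract how they changed over the same period in a region the outage didn't touch. The sketch below is an assumed illustration of that technique, not something described by Joe or Simeon, and the daily sales figures are invented. It also leans on a big assumption: that both regions would have moved in parallel without the outage.

```python
import statistics


def difference_in_differences(affected_before, affected_after,
                              unaffected_before, unaffected_after):
    """Change in the affected region minus the change in the comparison region."""
    affected_change = statistics.mean(affected_after) - statistics.mean(affected_before)
    unaffected_change = statistics.mean(unaffected_after) - statistics.mean(unaffected_before)
    return affected_change - unaffected_change


# Hypothetical daily sales before and during the outage.
affected_before = [100, 104, 98, 102]
affected_after = [90, 93, 88, 91]
unaffected_before = [200, 205, 195, 200]
unaffected_after = [202, 206, 198, 202]

estimate = difference_in_differences(
    affected_before, affected_after, unaffected_before, unaffected_after
)
print(f"estimated outage impact on daily sales: {estimate:+.1f}")
```

Subtracting the comparison region's change strips out whatever else happened during that window, like seasonality or a promotion running everywhere.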

Natural experiments can be remarkable learning opportunities – but as Joe reminds us, “How many natural experiments are going to show up for your business on the things that are really important for you to make decisions on?” In other words: Don’t bank on them.

“It takes a ton of effort to find cases that are really natural experiments,” stresses Simeon. “And it takes a ton of expertise to actually get good measurements from them.”

Orienting towards outcomes

“A lot of people use the term ‘experiment’ to mean ‘change something and see what happens,’” says Simeon. “But when it comes down to it, if you don’t do this in a way that is set up to actually measure the outcome, you might just be running in circles.”

And there you have it. In our next Incrementality School: what to actually do with incrementality test results and how to take action on them.
