You’re watching your favorite show when a catchy new car ad flashes across the screen. Later, scrolling Instagram, you spot a sleek campaign from the same brand. A few days after that, you pass a billboard on your commute featuring the exact same car.

When sales go up that quarter, which channel gets the credit? The TV spot? Instagram? The billboard? The truth is, it’s nearly impossible to untangle the impact of each touchpoint just by looking at platform reports. Each channel claims victory, leaving marketers stuck with overlapping numbers, inflated ROI claims, and no clear path forward.

Experiments are great at measuring the incrementality of geo-segmentable channels. But what about when you want to make budgeting predictions across your entire media mix, including channels you can't split by geography? This is where Marketing Mix Modeling (MMM) comes in.

Instead of relying on self-reported platform data or guesswork, MMM uses statistical modeling to analyze historical spend and outcomes, teasing apart the real contribution of each channel. Done well, it tells you not just what worked, but how to allocate your next dollar for maximum impact.

MMM was once available only to the largest CPG-focused brands. Today the technology is increasingly democratized, and MMM is making a comeback as one of the most popular forms of marketing measurement.

This guide will walk you through the fundamentals of MMM: What it is, what inputs it needs, the outputs you can expect, common pitfalls, and how modern MMMs are evolving to serve today’s digital-first brands.

Let’s dive in.

What is marketing mix modeling (MMM)?

At its core, a Marketing Mix Model is a statistical model that quantifies the relationship between marketing strategies and business outcomes. In plain language: It looks at how your marketing spend influences sales, revenue, or other key performance indicators (KPIs).

An MMM does this by analyzing historical data and teasing apart the contribution of different channels — whether that’s Meta prospecting ads, linear TV, direct mail, or paid search. The model then estimates the efficiency (ROI) of each channel and helps businesses optimize their overall marketing mix.
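
To make "teasing apart the contribution" concrete, here is a minimal sketch on simulated data. It assumes a plain ordinary-least-squares fit with no adstock (carryover) or saturation effects; real MMMs typically add both and are often Bayesian. The channel names, spend ranges, and "true" ROIs are hypothetical values used only to generate the data.

```python
import numpy as np

# Hypothetical weekly data: 104 weeks of spend (in $k) for three channels.
rng = np.random.default_rng(seed=0)
weeks = 104
channels = ["meta", "tv", "search"]
spend = rng.uniform(10, 50, size=(weeks, len(channels)))

# Assumed "true" effects, used only to simulate sales ($k revenue per $k spend).
true_roi = np.array([2.0, 1.2, 3.0])
baseline = 100.0  # sales that would happen with zero marketing
sales = baseline + spend @ true_roi + rng.normal(0, 5, size=weeks)

# Fit sales = intercept + sum(beta_c * spend_c) by ordinary least squares.
X = np.column_stack([np.ones(weeks), spend])
coef, *_ = np.linalg.lstsq(X, sales, rcond=None)
intercept, betas = coef[0], coef[1:]

# Channel contribution = estimated effect times total spend over the period.
contribution = betas * spend.sum(axis=0)
for name, beta, contrib in zip(channels, betas, contribution):
    print(f"{name:>6}: ROI ~ {beta:.2f}, contribution ~ {contrib:,.0f}")
```

Because the data are simulated with known effects, the fitted ROIs should land close to the assumed ones. With real data, channels whose spend moves together make this much harder, which is the multicollinearity problem covered later in this guide.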

Rather than relying on intuition, MMM provides a structured way to measure impact at a channel level. Done well, it allows teams to simulate scenarios — like what happens if you cut TV spend by 20% and shift it into TikTok — and forecast outcomes with confidence.

For example, a beauty brand might use an MMM to analyze the sales performance of a new product line in relation to their paid media investments. Maybe they find that Google PMax campaigns delivered the most efficient cost per incremental acquisition (CPIA), so they decide to allocate a greater portion of their ad budget toward that channel. To do so, they might pull spend away from channels that are delivering a less efficient contribution to sales. They also might use the MMM's scenario planning capabilities to simulate other potential budget allocations.
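
The CPIA comparison in this example is simple arithmetic: spend divided by the incremental acquisitions the model attributes to each channel. A minimal sketch, with hypothetical channel names and made-up numbers standing in for real MMM output:

```python
# Hypothetical MMM output for one quarter: spend and modeled incremental
# acquisitions per channel. All figures are illustrative, not real results.
channel_results = {
    "google_pmax": {"spend": 120_000, "incremental_acquisitions": 3_000},
    "meta_prospecting": {"spend": 150_000, "incremental_acquisitions": 2_500},
    "linear_tv": {"spend": 200_000, "incremental_acquisitions": 2_000},
}


def cpia(spend: float, incremental_acquisitions: float) -> float:
    """Cost per incremental acquisition: dollars spent per extra customer the channel caused."""
    if incremental_acquisitions <= 0:
        return float("inf")  # channel drove no measurable incremental customers
    return spend / incremental_acquisitions


# Rank channels from most to least efficient (lowest CPIA first).
ranked = sorted(channel_results, key=lambda ch: cpia(**channel_results[ch]))
for ch in ranked:
    print(f"{ch}: CPIA = ${cpia(**channel_results[ch]):,.2f}")
```

Note that this is an average CPIA over the whole quarter. Whether shifting budget pays off depends on the marginal CPIA, because the next dollar in a channel that is approaching saturation is usually less efficient than the average.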

Inputs: what data feeds an MMM

An MMM is only as good as the data you put into it. Generally, there are three major categories of inputs:

- Channel-Level Spend: Both digital and offline investments, such as Meta ads, Google Search, TV spots, radio, out-of-home, direct mail, influencer marketing, and beyond.

- Business KPIs: The dependent variables your business actually cares about. For retailers this might be revenue or transactions; for subscription businesses it might be new sign-ups or conversions.

- External Factors: Seasonality, promotions, macroeconomic shifts, competitive activity, or major one-off events. For instance, a holiday weekend, a global pandemic, or a viral mention on a podcast can all impact outcomes independently of spend.

The right data foundation allows an MMM to “control for noise” and isolate the true incremental lift from marketing activities.
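
In practice, these three input categories usually end up as one row per time period. The sketch below shows one way to stack them into a design matrix and a KPI vector. The `WeeklyRecord` structure, its field names, and the choice to treat missing spend or controls as zero are all hypothetical illustrations, not a required schema.

```python
from dataclasses import dataclass, field

import numpy as np


@dataclass
class WeeklyRecord:
    """One week of MMM inputs (field names are hypothetical)."""
    week: str                    # ISO week label, e.g. "2024-W47"
    spend: dict[str, float]      # channel-level spend, digital and offline
    kpi: float                   # dependent variable, e.g. revenue or sign-ups
    controls: dict[str, float] = field(default_factory=dict)  # external factors


def build_design_matrix(records, channels, control_names):
    """Stack records into (X, y); channels or controls missing in a week count as 0."""
    rows, y = [], []
    for r in records:
        spend_cols = [r.spend.get(c, 0.0) for c in channels]
        control_cols = [r.controls.get(k, 0.0) for k in control_names]
        rows.append(spend_cols + control_cols)
        y.append(r.kpi)
    return np.array(rows, dtype=float), np.array(y, dtype=float)


records = [
    WeeklyRecord("2024-W46", {"meta": 20.0, "tv": 50.0}, kpi=310.0),
    WeeklyRecord("2024-W47", {"meta": 35.0, "search": 12.0}, kpi=420.0,
                 controls={"black_friday": 1.0}),
]
X, y = build_design_matrix(records, ["meta", "tv", "search"], ["black_friday"])
# X rows: [20, 50, 0, 0] and [35, 0, 12, 1]; y: [310, 420]
```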

Outputs: what an MMM tells you

Once built, a good MMM can answer several critical questions:

- Channel Contribution: How much did each channel add to overall outcomes? Measuring incremental sales lift for each channel can give you the insights needed for effective budget reallocations.

- ROI & Marginal Returns: For every additional dollar spent, how much revenue can you expect? At what point does a channel saturate and returns diminish?

- Scenario Simulations: What if you shifted 15% of your search budget into video? What if you doubled your TV spend during Q4? MMMs enable marketers to model “what-if” scenarios before making costly moves in the real world.
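
Saturation, marginal returns, and what-if scenarios can all be read off a channel's response curve. The sketch below assumes a Hill saturation curve, a common choice in open-source MMMs, with made-up parameters for two hypothetical channels. It then simulates shifting 15% of search spend into video.

```python
import numpy as np


def hill_response(spend, max_response, half_saturation, shape):
    """Incremental revenue from a channel at a given spend, via a Hill saturation curve."""
    spend = np.asarray(spend, dtype=float)
    return max_response * spend**shape / (spend**shape + half_saturation**shape)


def marginal_return(spend, max_response, half_saturation, shape):
    """Derivative of hill_response: revenue from the next dollar at this spend level."""
    s, k, a = float(spend), half_saturation, shape
    return max_response * a * s ** (a - 1) * k**a / (s**a + k**a) ** 2


# Hypothetical fitted curve parameters per channel (spend and revenue in $k/week).
curves = {
    "search": dict(max_response=400.0, half_saturation=60.0, shape=1.5),
    "video": dict(max_response=300.0, half_saturation=80.0, shape=1.2),
}


def simulate(allocation):
    """Total modeled incremental revenue for a {channel: spend} allocation."""
    return sum(float(hill_response(allocation[ch], **curves[ch])) for ch in allocation)


current = {"search": 100.0, "video": 40.0}
shift = 0.15 * current["search"]  # move 15% of the search budget into video
scenario = {"search": current["search"] - shift, "video": current["video"] + shift}

print(f"current:  {simulate(current):.1f}")
print(f"scenario: {simulate(scenario):.1f}")
for ch, s in current.items():
    print(f"{ch} marginal return at current spend: {marginal_return(s, **curves[ch]):.2f}")
```

With these made-up curves, the scenario comes out slightly ahead because video's marginal return at its current spend is higher than search's. With different curve parameters, the same shift could just as easily lose revenue, which is why the fitted curves matter more than the arithmetic.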

The real power here is optimization. Instead of guessing, marketers get evidence-backed guidance on where to invest next. MMM is not a crystal ball, but it helps savvy marketers make educated budget decisions.
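
One simple way to turn response curves into an allocation is greedy marginal allocation: hand out the budget in small steps, each to the channel whose next step adds the most modeled revenue. The sketch below assumes hypothetical concave (diminishing-returns) curves; for S-shaped curves, greedy steps can get stuck, and a proper numerical optimizer is the safer choice.

```python
import math

# Hypothetical concave response curves: revenue = cap * (1 - exp(-spend / scale)).
# cap and scale (both in $k) are illustrative values, not fitted results.
CURVES = {
    "search": {"cap": 400.0, "scale": 60.0},
    "meta": {"cap": 350.0, "scale": 90.0},
    "tv": {"cap": 500.0, "scale": 200.0},
}


def response(channel: str, spend: float) -> float:
    """Modeled incremental revenue for one channel at a given spend."""
    c = CURVES[channel]
    return c["cap"] * (1.0 - math.exp(-spend / c["scale"]))


def allocate(total_budget: float, step: float = 1.0) -> dict[str, float]:
    """Greedy marginal allocation: give each budget step to the channel whose
    next step adds the most revenue. Optimal on the step grid for concave curves."""
    alloc = {ch: 0.0 for ch in CURVES}
    remaining = total_budget
    while remaining >= step:
        gains = {ch: response(ch, alloc[ch] + step) - response(ch, alloc[ch]) for ch in alloc}
        best = max(gains, key=gains.get)
        alloc[best] += step
        remaining -= step
    return alloc


plan = allocate(300.0, step=5.0)
total = sum(response(ch, s) for ch, s in plan.items())
print(plan, f"modeled revenue ~ {total:.1f}")
```

The design choice here is transparency over sophistication: every step is explainable as "this channel had the best next dollar," which mirrors how the marginal-return outputs above are meant to be used.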

A brief history of MMM

Marketing Mix Modeling (MMM) has been around longer than most digital marketers have been alive. It first emerged in the 1960s, when consumer giants like Procter & Gamble and Coca-Cola needed a way to measure the impact of TV, radio, and print. With no click data to lean on, statisticians turned to regression models, comparing changes in ad spend with changes in sales. For decades, MMM was the gold standard for big brands with steady budgets and plenty of patience.

But there was a catch: Those early models were slow, expensive, and often shrouded in mystery. Reports took months to produce, relied heavily on expert consultants, and were often out of date by the time they reached decision-makers.

The digital era didn’t kill MMM; it just pushed it into the background as attribution and user-level tracking took center stage. Now, with cookies disappearing and privacy regulations rising, MMM is back in the spotlight. Armed with Bayesian methods, open-source tools, and faster update cycles, modern MMMs are lighter, more agile, and better suited for today’s fast-moving brands.

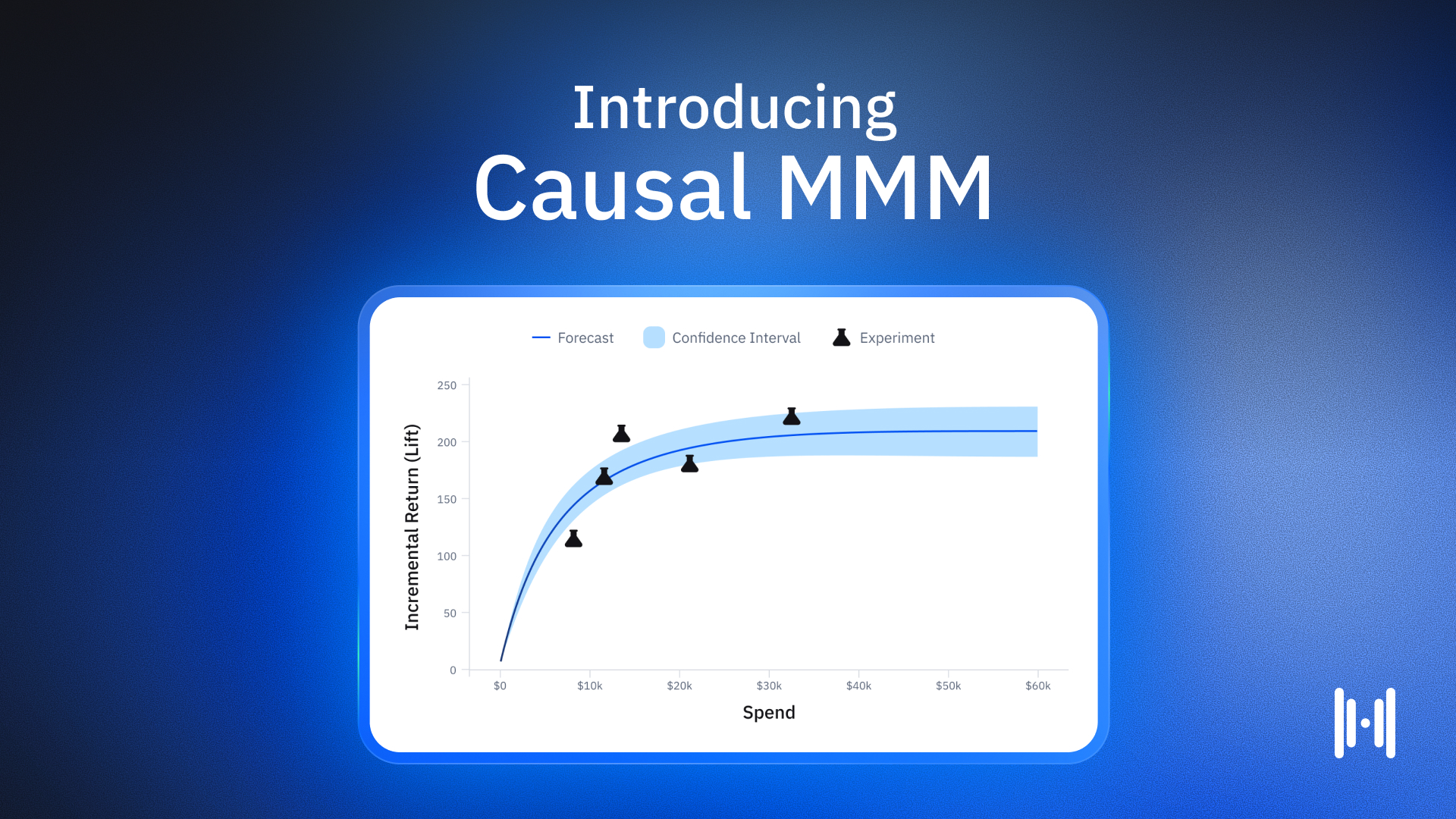

The newest evolution of MMM is the causal MMM — models that anchor themselves in experimental data, ensuring their predictions are grounded in true cause-and-effect rather than correlation alone.

Types of MMMs

Not all MMMs are created equal. Here’s a breakdown of the main flavors:

Legacy MMMs

Large, correlation-based models updated infrequently (quarterly or annually). They often involve long lead times, heavy expert involvement, and wide variable selection including macroeconomic factors.

- Best suited for: Stable, enterprise brands with consistent business patterns.

- Pros: Bespoke insights, highly detailed, enterprise-ready.

- Cons: Expensive, slow, opaque methodologies, often underweight experimentation.

Open Source MMMs

A new wave of open-source frameworks (e.g., Robyn, LightweightMMM) has democratized access to MMM. These allow internal teams to build their own models with flexibility and transparency, but they often require significant technical expertise to implement and maintain.

- Best suited for: Brands with an in-house data science team, MMM expertise, and a limited budget, or brands looking for a simple MMM.

- Pros: Relatively inexpensive with the right team in place to support.

- Cons: Limited support, requires internal knowledge, unlikely to be a best-in-class solution.

Upstart MMMs

A growing category of SaaS platforms providing more user-friendly MMMs, often updating on a weekly or monthly cadence.

- Best suited for: Digital-first or fast-moving brands that need agility.

- Pros: Easier to implement, quicker updates, built for action.

- Cons: Many still underweight experimentation or over-rely on black-box assumptions.

The core challenge MMMs face: multicollinearity

One of the trickiest hurdles in MMM is multicollinearity — when too many variables move in lockstep. Picture this: Your company launches a big sales push. At the same time, you dial up Meta prospecting, run a burst of TV ads, and increase paid search. Revenue climbs — but was it the ads? The promotion? Or just the natural momentum of your brand? To a statistical model, those signals blur together, making it hard to pinpoint which channel truly drove growth.
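
A standard statistical diagnostic for this problem, not something specific to any MMM vendor, is the variance inflation factor (VIF): regress each channel's spend on all the others and compute 1 / (1 - R²). The sketch below simulates the scenario above, with Meta, TV, and search all driven by a hypothetical "sales push," plus one independently scheduled channel for contrast.

```python
import numpy as np


def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF for each column: 1 / (1 - R^2) from regressing that column on the others.
    Values well above ~5-10 are a common rule-of-thumb warning sign."""
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        y = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ coef
        r2 = 1.0 - resid.var() / y.var()
        vifs[j] = 1.0 / (1.0 - r2)
    return vifs


rng = np.random.default_rng(1)
weeks = 104
push = rng.uniform(0, 1, weeks)  # hypothetical "big sales push" intensity
meta = 40 * push + rng.normal(0, 2, weeks)
tv = 60 * push + rng.normal(0, 3, weeks)
search = 25 * push + rng.normal(0, 1.5, weeks)
radio = rng.uniform(5, 15, weeks)  # scheduled independently of the push
X = np.column_stack([meta, tv, search, radio])
vifs = variance_inflation_factors(X)
print(np.round(vifs, 1))
```

The three channels that move with the push get large VIFs, meaning the data alone can barely separate their effects, while the independent channel stays near 1. A high VIF flags the problem; it does not solve it, which is where the approaches below come in.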

Vendors typically tackle this problem by constraining the data — making careful assumptions, simplifying relationships, and forcing the model into a structure that can be more easily solved. It’s tidy, but often artificial.

At Haus, we believe the better path is getting better data. By layering in experiments, external priors, and benchmarks, we strengthen the model with signals grounded in reality. Instead of guessing which channel gets the credit, we let evidence cut through the noise.

Priors: guardrails for better models

A prior is essentially a guardrail for what the model believes is plausible. MMM data is often noisy, so priors prevent the model from drifting into unrealistic territory.

- Example: A prior might suggest that branded search typically has an incremental return on ad spend (iROAS) between 0.1 and 3.0. The model then uses observed data to refine that belief.

- Why it matters: All Bayesian MMMs require priors. If a vendor didn’t ask you for them, they were quietly making assumptions about your business behind the scenes.
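
To show how a prior and observed data combine, here is a minimal one-coefficient sketch using a conjugate normal-normal update. It assumes the 0.1 to 3.0 branded search range above is read as a ~95% interval of a normal prior, and it uses a hypothetical noisy data estimate. Real Bayesian MMMs fit many coefficients jointly and often use priors that rule out negative returns, so treat this as an illustration of the idea only.

```python
import math


def normal_prior_from_range(low: float, high: float, z: float = 1.96):
    """Treat [low, high] as a ~95% interval and return (mean, sd) of a normal prior."""
    return (low + high) / 2.0, (high - low) / (2.0 * z)


def update_normal(prior_mean, prior_sd, data_mean, data_se):
    """Conjugate normal-normal update: precision-weighted average of prior and data."""
    w_prior, w_data = 1.0 / prior_sd**2, 1.0 / data_se**2
    post_var = 1.0 / (w_prior + w_data)
    post_mean = post_var * (w_prior * prior_mean + w_data * data_mean)
    return post_mean, math.sqrt(post_var)


prior_mean, prior_sd = normal_prior_from_range(0.1, 3.0)
# Hypothetical noisy iROAS estimate from the observational data alone.
post_mean, post_sd = update_normal(prior_mean, prior_sd, data_mean=4.5, data_se=1.5)
print(f"prior {prior_mean:.2f} ± {prior_sd:.2f} -> posterior {post_mean:.2f} ± {post_sd:.2f}")
# prints: prior 1.55 ± 0.74 -> posterior 2.13 ± 0.66
```

The noisy data point (4.5) pulls the estimate up, but the prior keeps it from jumping into implausible territory. The tighter the prior, the harder it holds, so a confidently wrong prior is just as dangerous as none at all.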

Different vendors take different approaches:

- Black box: They pick priors for you, often without transparency.

- Finger in the wind: They rely on customer instincts rather than evidence.

- Status quo: They simply reuse last year’s results.

At Haus, we believe every strong model should be anchored in reality — not just statistical patterns. That’s why our approach to MMM starts with the gold standard: experiments. When you can run a clean incrementality test, you get causal truth. No assumptions, no guesswork — just hard evidence of what’s working.

Of course, not every channel can be tested in real time. That’s where we bring in external priors. Think of these as carefully chosen benchmarks from credible sources. And for the channels that haven’t yet been directly tested? We set priors using the data most relevant to each customer’s business. In other words, we use the best available evidence at every step, layering rigor and context to keep the model realistic, reliable, and useful for decision-making.
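
The layered approach described above can be sketched as a simple priority order: experiment result first, external benchmark second, a wide fallback last. This is an illustration, not the Haus implementation. The `Prior` type, the dictionary layouts, the fallback values, and the assumption that each experiment reports a symmetric 95% confidence interval are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prior:
    mean: float   # prior center for the channel's iROAS
    sd: float     # prior spread; smaller means a stronger belief
    source: str   # where the evidence came from


def prior_from_experiment(point: float, ci_low: float, ci_high: float, z: float = 1.96) -> Prior:
    """Turn a lift test's iROAS estimate and symmetric 95% CI into a normal prior."""
    return Prior(mean=point, sd=(ci_high - ci_low) / (2 * z), source="experiment")


def choose_prior(channel, experiments, benchmarks, fallback=Prior(1.0, 1.5, "default")):
    """Use the strongest available evidence: experiment, then benchmark, then a wide fallback."""
    if channel in experiments:
        return prior_from_experiment(*experiments[channel])
    if channel in benchmarks:
        mean, sd = benchmarks[channel]
        return Prior(mean, sd, "benchmark")
    return fallback


experiments = {"meta_prospecting": (2.1, 1.5, 2.7)}  # point, CI low, CI high (hypothetical)
benchmarks = {"linear_tv": (0.9, 0.5)}              # mean, sd from an external source (hypothetical)

for ch in ["meta_prospecting", "linear_tv", "podcast"]:
    print(ch, choose_prior(ch, experiments, benchmarks))
```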

Controls: accounting for external factors

Even the best campaigns don’t happen in a vacuum. Sales can spike because you launched a killer ad — but they can also spike because something entirely outside of marketing grabbed attention. MMMs account for these outside forces through controls.

Some controls are historical one-offs — the kind of lightning-in-a-bottle events you couldn’t possibly predict. Imagine your brand gets a surprise shoutout on the Joe Rogan podcast. Sales jump overnight, but not because of any carefully planned media buy. An MMM needs to recognize that for what it is: a unique blip in history.

Others are ongoing and predictable — holidays, annual promotions, or the natural ebb and flow of competitive seasonality. These factors can and should be accounted for, because they reliably influence business performance.
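
To see why controls matter, here is a minimal simulation, assuming holiday weeks in which spend is deliberately raised and a single one-off spike (think surprise podcast mention). Encoding each as a 0/1 indicator column is one common technique, not necessarily how any specific vendor does it; all numbers are made up.

```python
import numpy as np

rng = np.random.default_rng(7)
weeks = 156
holiday = np.zeros(weeks)
holiday[[46, 47, 50, 51, 98, 99, 102, 103, 150, 151, 154, 155]] = 1.0  # hypothetical Q4 weeks
spike = np.zeros(weeks)
spike[80] = 1.0  # a one-off event, e.g. a surprise podcast mention

# Marketers spend more during holidays, so spend and the holiday effect move together.
spend = rng.uniform(20, 40, weeks) + 30 * holiday
sales = 200 + 2.0 * spend + 80 * holiday + 150 * spike + rng.normal(0, 10, weeks)


def fit(*columns):
    """OLS of sales on an intercept plus the given columns; returns coefficients."""
    X = np.column_stack([np.ones(weeks), *columns])
    coef, *_ = np.linalg.lstsq(X, sales, rcond=None)
    return coef


naive = fit(spend)
controlled = fit(spend, holiday, spike)
print(f"spend effect without controls: {naive[1]:.2f}")
print(f"spend effect with controls:    {controlled[1]:.2f}  (simulated truth: 2.00)")
```

Without the holiday control, the model credits marketing with the seasonal lift it happened to coincide with, and the spend effect comes out inflated. Adding just the two controls that actually drive sales recovers the true effect, which is the case for restraint made below.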

Legacy MMM providers often throw the kitchen sink at controls, layering in proprietary datasets on everything from weather patterns to macroeconomic markers. It sounds impressive, but more data isn’t always better. Each added dataset comes with cost, complexity, and the risk of muddying the signal.

A smarter approach is restraint: focus on the controls that actually move the needle for your business. That way, your MMM stays lean, accurate, and actionable — without drowning in noise.

Conclusion: the future of MMM

MMM has made a comeback — this time faster, clearer, and more actionable than ever. But not all MMMs are created equal. The strongest models are rooted in causality, anchored to the gold standard of geo experiments to separate true impact from mere correlation. When built on this foundation, MMM transforms from a backward-looking report into a forward-looking compass, giving marketers the confidence to allocate spend intelligently, defend budgets internally, and scale with precision.

Marketing Mix Modeling (MMM): Frequently Asked Questions

What is Marketing Mix Modeling (MMM)?

Marketing Mix Modeling (MMM) uses regression modeling to estimate how different marketing channels (e.g., TV, Meta, Google, out-of-home) contribute to your primary KPIs (such as sales, orders, or subscriptions). Using this link between marketing spend and outcomes, companies can better understand how to allocate their marketing budget to maximize efficiency and overall business performance.

How does MMM differ from multi-touch attribution (MTA)?

The first main difference is privacy durability: MMM works from aggregated historical data, while MTA tracks user-level digital behavior across marketing touchpoints to attribute outcomes to tactics. The second is scope: MTA assigns "credit" to individual touchpoints, while MMM calculates, holistically, how your whole media mix, including offline channels, contributes to sales. Teams often use attribution models to validate the recommendations of their MMM.

How often should MMM be updated?

You need an MMM that keeps up with the pace of your business. Oftentimes, companies update their MMM every few months, but sometimes this isn't enough to reflect new pricing, new products, or new marketing conditions. That's why Causal MMM refreshes weekly so that you can take an iterative, dynamic approach to budgeting and strategy.

How can MMM inform future marketing decisions?

It's simple: MMM helps you pinpoint the channels that are moving the needle for your brand — and the ones that aren't. Based on your MMM results, you can reallocate spend from inefficient channels to more efficient channels. Scenario planning makes it easy to forecast how different budget allocations might perform and what would happen if you pursued certain spend mixes.

Is MMM still relevant in a cookieless world?

Yes. In fact, MMM is making a comeback in 2025. Because it doesn’t depend on user-level tracking, MMM is unaffected by cookie deprecation and fits comfortably within privacy regulations. MMM offers a durable, privacy-safe measurement framework for brands seeking long-term insights into marketing effectiveness.

How does MMM complement incrementality testing and experimentation?

MMM provides a top-down, long-term perspective, while incrementality tests (like geo-experiments or A/B tests) validate specific short-term causal effects. Together, they form a holistic measurement ecosystem — MMM identifies macro trends, and testing validates micro-level changes. A recent survey from Kantar found that more than half of teams are combining MMM with other tools like attribution or experiments. This blended approach is becoming the norm.
