If an ad is shown on your TV but no one is home to see it, does it make an incremental impact?

The answer, it turns out, depends on how it’s being measured.

In the first two installments of our Incrementality School series, we discussed the concept of incrementality and the kinds of questions it can help answer.

We learned that incrementality is “just the non-scientist-friendly way of saying causality” and that “marketing without knowing your incremental returns is just lighting money on fire”.

Yikes. Given that destroying currency is a crime, we’d say it’s worth figuring out what’s incremental and what’s not, lest we waste away in prison for an ill-measured sock ad.

Attribution as an “incomplete and inaccurate solution”

Before we dive into the wonderful world of randomized controlled trials, let’s take a look at the most common tools marketers are using today to drive media buying decisions: Google Analytics and platform reporting.

These tools are cheap and easy to implement, but the convenience has a hidden cost: Google Analytics supports the narrative that paid search is the most effective advertising channel, while platform reporting is known for suggesting that advertising is somehow collectively driving more sales than appear on the business’s P&L.

These tools belong to the “attribution” class of measurement products, a name implying they can appropriately attribute credit to the ads responsible for driving sales. Yet such products are largely unthinking and rules-based: they track digital ad interactions and link them to web conversions via cookies, which are akin to invisible stalkers that live in your web browser, waiting and watching from the moment you see an ad until you buy.

Attribution products don’t care if the customer is metaphorically “home” when the ad is served — if a cookie is dropped and still stored in the browser when a customer converts, the ad is credited, regardless of whether the person who converted looked at the ad, let alone was influenced by it.

“Ad served. User convert. Ad get credit.” (Best read with the voice of Hulk in mind)
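To make the rule concrete, here is a minimal Python sketch of one common rules-based scheme: last-touch attribution with a lookback window. The `AdCookie` record, the seven-day window, and the channel names are hypothetical illustrations, not how any particular product works. Notice that the `was_seen` flag is stored but never consulted; credit depends only on whether a cookie exists.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class AdCookie:
    """Hypothetical record of an ad interaction stored in the browser."""
    channel: str
    dropped_at: datetime
    was_seen: bool  # whether a person actually looked at the ad; the rule below ignores it


def last_touch_credit(
    cookies: List[AdCookie],
    converted_at: datetime,
    window: timedelta = timedelta(days=7),  # assumed lookback window
) -> Optional[str]:
    """Give 100% of the credit to the most recent cookie inside the lookback window."""
    eligible = [
        c for c in cookies
        if converted_at - window <= c.dropped_at <= converted_at
    ]
    if not eligible:
        return None  # no tracked ad interaction, so no ad gets credit
    return max(eligible, key=lambda c: c.dropped_at).channel


# A display ad nobody saw still "wins" because its cookie is the most recent one.
t0 = datetime(2024, 1, 1)
cookies = [
    AdCookie("search", t0, was_seen=True),
    AdCookie("display", t0 + timedelta(days=2), was_seen=False),
]
credited = last_touch_credit(cookies, converted_at=t0 + timedelta(days=3))
print(credited)  # display
```

Swapping in a different rule (first touch, a longer window) changes which ad gets credit, but no rule of this kind ever asks whether the ad caused the purchase.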

Multi-touch attribution (MTA) software that intelligently splits credit across all advertising was once hailed as the cure-all for these limitations, but as Feliks Malts, a Solutions Engineer at Haus, puts it: “The rise of consumer privacy policies and loss of data availability that followed has rendered MTA an incomplete and inaccurate solution going forward.”

Not only are MTAs largely blind to ad interactions that don’t result in a click (e.g. impressions), but they also lack the ability to connect these touchpoints to sales that happen off-site (e.g. Amazon or in-store). (Hulk has been blindfolded by regulators and stripped of his favorite kind of cookies — it’s not a good environment for accurate smashing… err, recognition of causal impact.)

In short, while these tools check an optimizing-day-to-day-performance box, they don’t tell a story rooted in causality — in other words, whether a campaign is incremental to your business.

Media modeling shortcomings

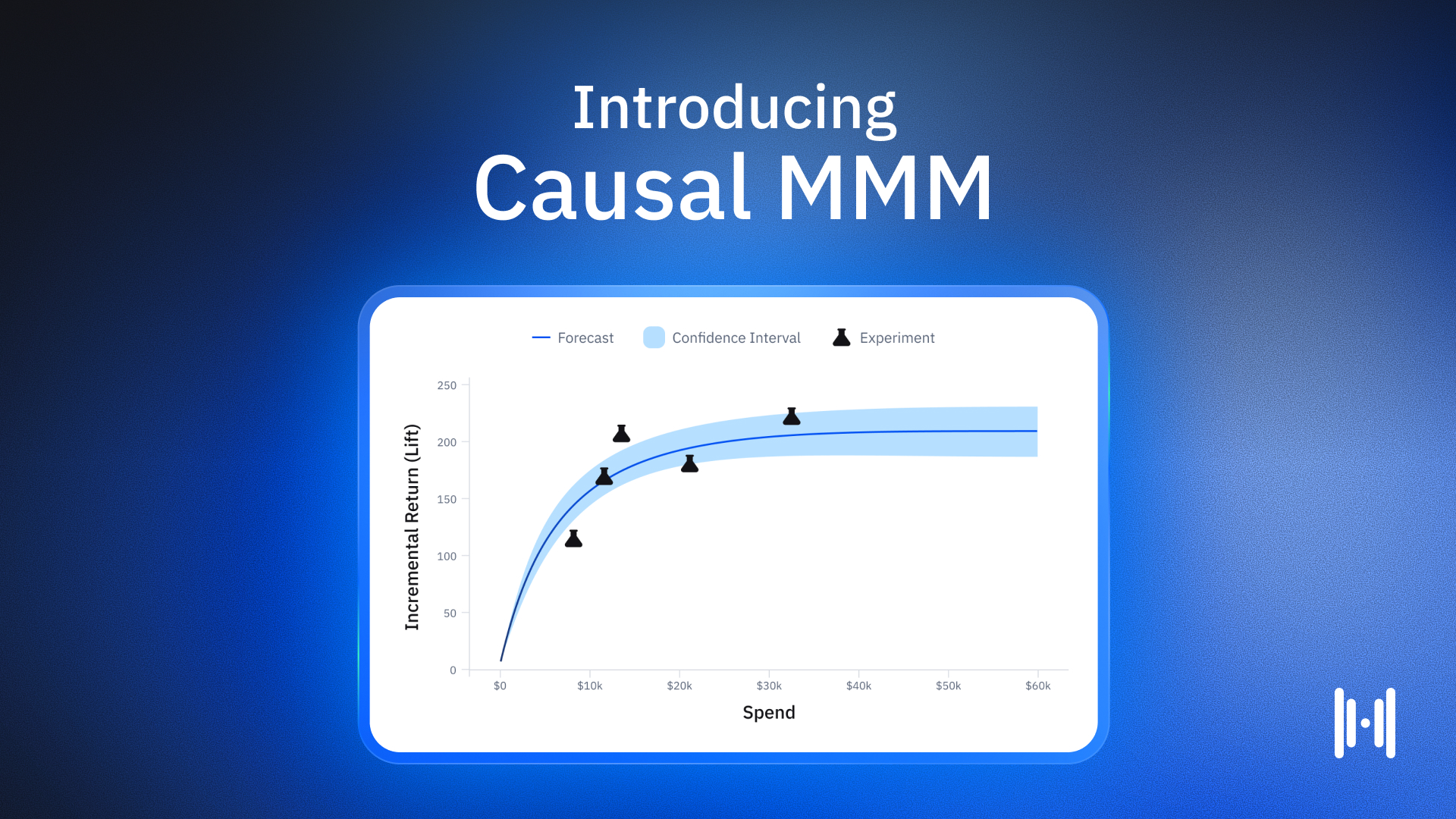

If the basic logic of attribution is too pedestrian, wait for this one: A traditional (aka, not causal) media mix model (MMM) is filled to the brim with opaque mathematics that’ll make your head spin.

Although a powerful tool for legacy consumer packaged goods (CPG) brands with global distribution and decades of historical data, traditional MMMs applied to modern direct-to-consumer (DTC) brands are all too often a CPG-shaped peg in a DTC-shaped hole.

While an MTA identifies relationships based on clicks and conversions, a traditional MMM doesn’t like to get its hands dirty dealing with pixels and messy user-level data. With just two kinds of variables, spend (per channel) and sales, one can build a traditional MMM using linear regression. By identifying how sales respond to shifts in spend on a channel over time, the model aims to predict how increasing budget on that channel will impact sales.
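A bare-bones version of that regression might look like the Python sketch below. The two channels, the weekly spend figures, and the “true” effects are all made-up simulation inputs, and real MMMs layer on adstock, saturation curves, and seasonality; this only shows the core idea of regressing sales on spend and reading the coefficients as returns.

```python
import numpy as np

rng = np.random.default_rng(0)
weeks = 104

# Hypothetical weekly spend (in $k) on two channels, chosen at random.
spend = rng.uniform(10, 50, size=(weeks, 2))

# Simulated sales: a baseline plus a fixed return per $k of spend, plus noise.
true_baseline = 200.0
true_effects = np.array([3.0, 1.5])  # assumed sales per $k on each channel
sales = true_baseline + spend @ true_effects + rng.normal(0, 5, size=weeks)

# Design matrix: an intercept column (baseline sales) plus one column per channel.
X = np.column_stack([np.ones(weeks), spend])
coef, *_ = np.linalg.lstsq(X, sales, rcond=None)
baseline, effects = coef[0], coef[1:]

# The model's forecast: extra weekly sales from adding $10k to channel 0.
predicted_lift = 10 * effects[0]
print(np.round(effects, 2), round(predicted_lift, 1))
```

The fit recovers the planted effects here only because the simulated spend was set independently of sales, a condition real-world budgets often don’t satisfy.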

The trouble is that linear regression cannot prove causation on its own. It can illustrate correlations, but it doesn’t define which way the arrow of causality points.

For example, you could decide to ratchet up your connected TV (CTV) spend every time your business achieves a new record-high sales figure. Unchecked, a traditional MMM would pretty soon tell you that if you want to increase sales, you should increase your spend on CTV, even though the chain of causality in this case was exactly the opposite.
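The CTV scenario is easy to reproduce in a toy simulation. In the Python sketch below (all numbers hypothetical), CTV spend has no effect on sales by construction; the budget rule simply adds $5k of CTV spend after every record sales week. Regressing sales on CTV spend still produces a positive coefficient, which a correlation-only MMM would read as “CTV drives sales.”

```python
import numpy as np

rng = np.random.default_rng(1)
weeks = 104

# Sales grow on their own (trend + noise). CTV plays no part in this equation.
sales = 500 + 2.0 * np.arange(weeks) + rng.normal(0, 20, size=weeks)

# Budget rule: after any week that sets a new sales record, raise CTV spend by $5k.
ctv_spend = np.empty(weeks)
ctv_spend[0] = 20.0
best_so_far = -np.inf
for t in range(1, weeks):
    last_week = sales[t - 1]
    set_record = last_week > best_so_far
    best_so_far = max(best_so_far, last_week)
    ctv_spend[t] = ctv_spend[t - 1] + (5.0 if set_record else 0.0)

# Naive regression of sales on CTV spend, as a correlation-only MMM would do.
X = np.column_stack([np.ones(weeks), ctv_spend])
coef, *_ = np.linalg.lstsq(X, sales, rcond=None)
ctv_coef = coef[1]
print(f"apparent sales per $k of CTV: {ctv_coef:.2f}")  # positive, despite zero true effect
```

More data doesn’t fix this: the arrow runs from sales to spend, and only varying spend independently of sales (for example, through an experiment) breaks the loop.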

Statistical hazards like this tie marketers trying to apply these models to their business into mental pretzels. Absent a deep understanding of the math behind traditional MMM, the best we can do is gut-check what it’s recommending. If it agrees with our expectations, we accept it; if it disagrees, we reject it. In either case, we haven’t learned anything new. However, pair MMMs with experiments and a glimmer of promise emerges. (Pssst. Learn more about our forthcoming Causal MMM here.)

Just test it

Many of us have been burned by negative experiences with attribution and traditional MMM solutions, but they’re not entirely hopeless. As Feliks will tell you, “These solutions are only as accurate as the incrementality tests calibrating them.”

While both attribution and traditional MMM tools are inherently biased and flawed (the former due to its love for clicks, the latter due to its reliance on correlation), they can be saved and harnessed for good. All they need is the assistance of an old-fashioned experiment. Or, in the case of Haus, a new-fashioned one.

An incrementality test is just another name for a randomized controlled trial, the gold standard for evaluating the effectiveness of medical interventions. It’s the simplest and most accurate way to understand the cause-and-effect relationship between an intervention and an outcome.

In a geo experiment (the primary type of experiment we run here at Haus), we randomly assign markets across a specific country to receive either the treatment (advertising on a specific channel) or the placebo (no advertising). By comparing sales in the markets that received ads against sales in the markets that didn’t, we can measure the lift the ads produced: their true, causal impact.
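Here is a toy version of that design in Python: randomly split a set of hypothetical markets into treatment and control, then estimate lift with a simple difference-in-differences (each market’s change from a pre-period, treated average minus control average). The market count, sales levels, and the planted `TRUE_LIFT` are assumptions for illustration, and this textbook estimator is a stand-in, not Haus’s methodology.

```python
import numpy as np

rng = np.random.default_rng(42)
n_markets = 200

# Hypothetical weekly sales (in $k) per market before the test starts.
baseline = rng.normal(100, 15, size=n_markets)
pre = baseline + rng.normal(0, 5, size=n_markets)

# Random assignment: exactly half the markets get ads (treatment), half don't (control).
treated = np.zeros(n_markets, dtype=bool)
treated[rng.choice(n_markets, size=n_markets // 2, replace=False)] = True

TRUE_LIFT = 5.0  # assumed causal effect of the ads, in $k per market per week
post = baseline + rng.normal(0, 5, size=n_markets) + TRUE_LIFT * treated

# Difference-in-differences: compare each group's average change from pre to post.
change = post - pre
lift = change[treated].mean() - change[~treated].mean()
se = np.sqrt(change[treated].var(ddof=1) / treated.sum()
             + change[~treated].var(ddof=1) / (~treated).sum())
print(f"estimated lift: {lift:.2f} ± {1.96 * se:.2f} ($k per market per week)")
```

Setting `TRUE_LIFT = 0.0` (the ad playing to an empty living room) pulls the estimate toward zero, because randomization leaves nothing else that systematically differs between the two groups.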

In other words: Unless the TV ad playing in an empty home set off an inexplicable chain reaction that made someone want to buy your product, an incrementality test wouldn't attribute any credit to it — just how it should be.

And that is the beauty of experimentation. For more on the types of businesses, brands, and teams that are ideal candidates for incrementality testing, stay tuned for the next installment of Incrementality School.
