From Guesswork to Causal Truth: Measurement Lifer Feliks Malts’ Best Practices for Incrementality Testing

Haus' Feliks Malts has partnered with hundreds of teams on their incrementality testing programs. Here's how he ensures they're set up to drive real business impact.

Jan 28, 2026

After years of building and leading analytics and experimentation practices at agencies like DEPT, Haus Principal Solutions Consultant Feliks Malts has seen how bad experiments can erode confidence, waste budgets, and stall growth.

In this edition of Haus Spotlight, Feliks lays out his best practices for effective incrementality testing — from embracing continuous testing to getting thorough with test design. He also shows how a commitment to precision and velocity can turn incrementality testing into a true decision-enablement layer, not just another one-off report.

So without further ado, here is Feliks' advice for teams getting started with testing.

Understand your business’s testable media.

When a business looks like a good fit for Haus, Feliks’ team spends time learning about their business, showing them the Haus platform, and then asking for something specific: real data on current spend and business volume.

“That allows us to transparently tell them what percentage of their budget is actually testable, based on businesses of comparable spend and sales conditions,” explains Feliks. “We never want to bring on a customer under the illusion that they can test absolutely everything. We’d rather be upfront and tell them, ‘You’d need to be at this level, relative to your business size, to be able to test effectively in the channels you’re spending in.’”

Once they’ve confirmed there’s enough testable media, Feliks and his team build a 120-day roadmap that sketches out what the brand’s first few months with Haus could look like. More recently, they’ve used case study concepts from other customers to create one-page business cases that outline potential outcomes, actions, and impacts.

Test continuously.

Feliks maintains that the best teams are testing across their entire marketing ecosystem — or at least 80% of their investments. These are channels you can test during peak periods, seasonal periods, and softer periods alike, as well as at different spend levels.

“If you gave me just three or four tactics — say, Google, Meta, and YouTube — I could still build a roadmap spanning five or six months,” says Feliks. “Because we’re not just looking at channel performance; we’re looking at spend elasticity, creative impact, and optimization signals.”

Feliks has seen this process empower marketing leaders to make media more efficient, uncover growth opportunities, and confidently go back to their leadership in six to nine months and say, “We ran 15 experiments. As a result, we’re 20% more efficient and have grown 50%.”

Don’t cut corners in test design — running bad tests comes with real risk.

Feliks and his team talk with two types of businesses in the pre-sales cycle:

- Brands that have never done any incrementality testing — everything they’ve done so far is based on educated guesses.

- Brands that have done testing, sometimes even internally, and been burned.

“For the latter group, we often hear about inconclusive results,” says Feliks. “Or sometimes they’ll say they made a change based on a test, and it didn’t materialize as expected. That’s a real risk, because these experiences can lead teams to lose trust in incrementality testing altogether.”

When meeting with potential vendors, Feliks encourages teams to probe deeply into the vendor’s methodology and challenge them on why they’re more precise and transparent. Here are some questions he suggests asking a vendor:

“Is test design stratified or based on matched markets?”

“Stratification is a big deal,” says Feliks. “Stratified designs are truly nationally representative of the business. In matched market testing, you always had a data scientist who biased the test toward the markets they thought looked good.”
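To make the distinction concrete, here is a minimal sketch of stratified random assignment. It is not Haus's methodology, which the article does not describe in detail. It only illustrates the general idea: group markets into strata by size, then let a random draw, rather than an analyst's judgment, decide which markets in each stratum get treated. The market names, sales figures, and the `stratified_assignment` helper are all hypothetical.

```python
import random

def stratified_assignment(market_sales, n_strata=3, seed=0):
    """Assign markets to treatment/control at random within size-based strata.

    market_sales: dict mapping market name -> baseline sales (illustrative only).
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    # Rank markets from largest to smallest baseline sales.
    ranked = sorted(market_sales, key=market_sales.get, reverse=True)
    stratum_size = -(-len(ranked) // n_strata)  # ceiling division
    assignment = {}
    for start in range(0, len(ranked), stratum_size):
        stratum = ranked[start:start + stratum_size]
        rng.shuffle(stratum)  # the random draw replaces hand-picking
        half = len(stratum) // 2
        for i, market in enumerate(stratum):
            assignment[market] = "treatment" if i < half else "control"
    return assignment

# Made-up data: 12 markets with descending baseline sales.
markets = {f"market_{i:02d}": 1000 - 50 * i for i in range(12)}
assignment = stratified_assignment(markets, n_strata=3, seed=42)
treated = [m for m, arm in assignment.items() if arm == "treatment"]
print(len(treated), "of", len(assignment), "markets treated")
```

Because every stratum contributes markets to both arms, large and small markets are represented on each side of the test, and no one chooses markets because they "look good."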

“Is the model markedly different from open source packages?”

“Many vendors are just building new UIs on top of those open source packages,” warns Feliks. “Often, potential customers compare us to open-source packages, so we make sure to highlight not just the scientific precision of our platform, but also the value businesses get from working with a dedicated team that’s already solved for all the edge cases they would otherwise have to handle themselves.”

“How do you handle precision?”

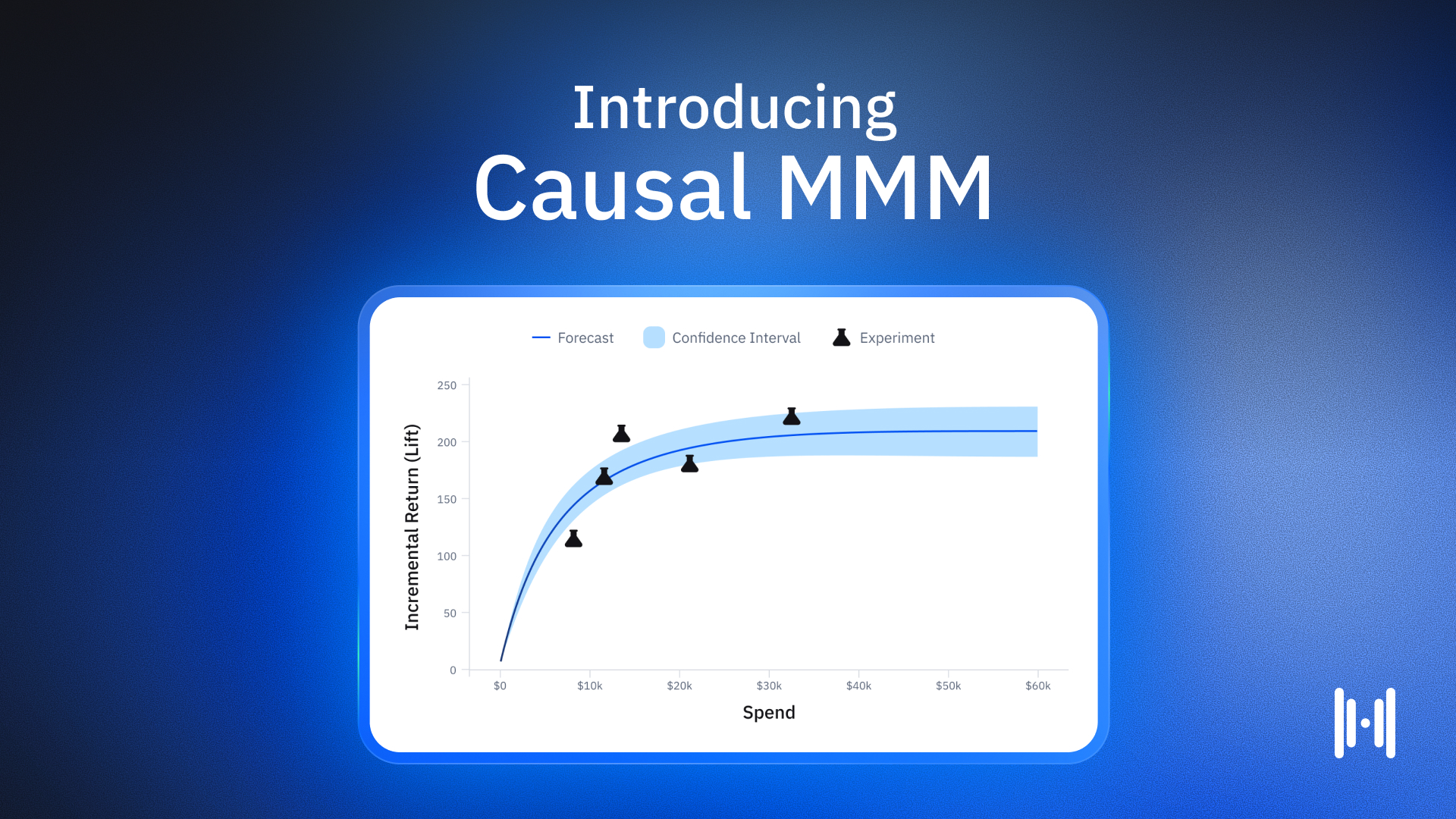

“When Haus tells you that you have a lift of 20% with a confidence interval of ±2, your lift is between 18% and 22%,” says Feliks. “Most other tools don’t provide that clarity because the open source packages they rely on aren’t transparent.”

For instance, a vendor might tell you that your statistical significance is above 90%, but that only tells you the lift is unlikely to be zero. It doesn't mean the estimate is tight. In reality, the confidence interval could be as wide as ±20 percentage points, meaning your 20% lift could actually be anywhere from 0% to 40%. If you ran the same test a month later, you'd likely get a very different number.
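The arithmetic behind those two scenarios can be sketched in a few lines. This assumes a symmetric interval with the half-width expressed in percentage points, which is how the examples above read. The function names are illustrative and not part of any vendor's API.

```python
def lift_interval(point_estimate_pct, half_width_pct):
    """Return (low, high) bounds for a lift reported as estimate +/- half-width."""
    return (point_estimate_pct - half_width_pct,
            point_estimate_pct + half_width_pct)

def relative_half_width(point_estimate_pct, half_width_pct):
    """Half-width as a fraction of the estimate; smaller means a tighter read."""
    return half_width_pct / point_estimate_pct

tight = lift_interval(20.0, 2.0)    # the 20% +/- 2 example
wide = lift_interval(20.0, 20.0)    # the 20% +/- 20 example

print(tight, relative_half_width(20.0, 2.0))
print(wide, relative_half_width(20.0, 20.0))
```

Both tests report the same 20% headline lift, but the second interval reaches all the way down to zero, so it cannot support a confident budget decision on its own.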

Velocity can be a force multiplier.

“A lot of businesses come to Haus for the testing velocity,” says Feliks. “We handle all the design work upfront, so you can create and launch a test in minutes, with results available as soon as the test concludes. The efficiency and automation that come with a SaaS model are huge advantages.”

This eliminates the slowdowns that often come with in-house incrementality testing: the back-and-forth of planning, the intricacies of test design, and the rounds of analyzing results.

Having an external partner like Haus frees growth leaders to focus on more impactful work for the business, like pricing analytics, LTV modeling, or customer cohort analysis.

“Marketing leaders can diversify their skill sets, expand their expertise, and rely on Haus to enable the marketing team to run tests accurately,” says Feliks, “freeing them from the repetitive, manual work that internal testing often requires.”

Incrementality testing should enable decisions and measurable business outcomes.

Feliks finds that the most successful businesses don’t see Haus as a reporting platform — they see it as an experimentation platform that enables better decision-making.

“In times of uncertainty, when a company needs to cut spend or improve efficiency within an existing budget, we can build a roadmap tailored to that,” says Feliks. “The goal is to find where dollars can be reallocated in the media mix to get the most business impact for the same spend.”

Or maybe you’re a fast-growing brand that wants to throw gasoline on the fire. You might want a tool that helps you quickly understand which channels are expanding effectively, which ones aren’t, and where there’s room to scale.

“When we remind brands that Haus is a decision-enablement platform rather than a reporting tool, it clicks for businesses that this isn’t just a shiny dashboard,” says Feliks. “It’s a platform built to help them make smarter, faster, and more confident decisions that drive measurable business results.”
